In March 2018, a horrible and sad crash occurred in Mountain View, CA, when a Tesla Model X being driven on Autopilot drove itself into the gore point of the left-hand exit from US-101 to CA Hwy 85. The driver had the car in Autopilot mode, and at the time of the accident his cell phone was actively being used. The assumption is that he was playing with his cell phone and not paying attention to the car. Another issue is that, at the time, certain road configurations could fool the Tesla Autopilot software into doing the wrong thing.

In February 2020, the NTSB released a report about the accident. It was from this report that I learned the phrase “passive vigilance”. It matches a hunch I had about what partial self-driving systems like Tesla’s Autopilot would do. Namely, because a partial self-driving system (Tesla Autopilot) takes over so much of the driving task, drivers will be lulled into trusting the system too much.

As the NTSB report puts it:

Research shows that drivers often become disengaged from the driving task for both momentary and prolonged periods during automated phases of driving.

NTSB: Collision Between a Sport Utility Vehicle Operating With Partial Driving Automation and a Crash Attenuator Mountain View, California March 23, 2018

The NTSB goes on to note four crashes it investigated in which Tesla’s Autopilot partial automation was the critical factor. The drivers were inattentive, supervising neither the Autopilot system nor the road conditions around them. For example, in two cases the drivers took no evasive action as “semitrailer vehicles” crossed their cars’ path.

At the time, I wrote a post titled “Self-driving vehicles need to crawl before they walk, and walk before they run”. In it, I argued that “we” and the industry were moving too rapidly toward self-driving cars, and that more caution was required.

The NTSB report documents failings by both the Tesla Autopilot system and the driver that led to the crash. Autopilot steered the car into a danger zone, then failed to detect the danger and did not warn the driver. But the driver in the March 2018 Mountain View crash was inattentive and failed to supervise the car as required. Instead, he was actively using his cell phone to play a game.

The NTSB report writes:

Following the investigation of the Williston crash, the NTSB concluded that the way the Tesla Autopilot system monitored and responded to the driver’s interaction with the steering wheel was not an effective method of ensuring driver engagement.

NTSB: Collision Between a Sport Utility Vehicle Operating With Partial Driving Automation and a Crash Attenuator Mountain View, California March 23, 2018

As a result, the NTSB issued recommendation H-17-42, which it summarizes as:

H-17-42: Develop applications to more effectively sense the driver’s level of engagement and alert the driver when engagement is lacking while automated vehicle control systems are in use.

But of the six automakers that received this recommendation, Tesla is the only one that has not responded. To compound the issue, this week Tesla announced a plan to move ahead with the release of its Full Self Driving software. One wonders about the rush.

What brought me to revisit this is a Twitter thread by Twitter user @TubeTimeUS. He did a good job of summarizing what happened, starting with the post you see pictured at the top of this article.

Revisiting the March 2018 Tesla Autopilot crash in Mountain View, CA

Let’s start by revisiting the crash in question.

This NTSB diagram is much better than what I drew back in 2018. At that section of US-101, there are three southbound lanes for primary traffic and one southbound HOV lane. The Tesla Model X was in the HOV lane because of California’s policy of rewarding EV drivers with access to that lane. There is a two-lane exit to the side leading to CA Highway 85, and a one-lane exit to the left going to the HOV lane on Hwy 85. The driver was in the US-101 HOV lane, and therefore next to the left-hand exit.

The left-hand exit involves a long approach to a ramp that goes over US-101. The NTSB image shows an area marked “Gore Area”. This is a little confusing, because that “gore area” is effectively a potential lane of traffic, which people often take at the last minute upon realizing they’re about to miss their exit. Only painted markings on the road separate the Gore Area from the official lanes. At the end of the Gore Area is a concrete barrier between the ramp and the US-101 lanes.

In my earlier report, I included some videos showing Teslas on Autopilot making mistakes in similar situations. Autopilot uses cameras and software to detect the lanes on the road. But the software is not entirely accurate, and in some cases it decides to follow the wrong lines on the road.

In this case the Tesla Model X got confused by the left-hand exit lane. It steered the car into the Gore Area, when it should have stayed in the lane. The driver is supposed to be attentive to the car, and to correct the car with the steering wheel. But the driver did not do so.

Here’s a timeline of the final 10 seconds before the fatal collision.

The left-hand side covers the time period from 9.9 seconds to 4.9 seconds before the crash, and the right-hand side covers the last 4.9 seconds.

At that time, the cruise speed was set to 75 miles/hr, and the Model X was following another car 83 feet ahead. Its actual speed was between 64 miles/hr and 66 miles/hr, below the 75 miles/hr cruise setting. The car had been configured to use the minimum following distance.
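To put 83 feet in perspective, the short sketch below converts the speeds quoted above to feet per second and computes the time gap to the lead car. This is simple arithmetic on the NTSB figures. The helper names are my own and are only illustrative.

```python
# Time gap to the lead car, using the distance and speeds quoted above.
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def time_gap_seconds(distance_ft: float, speed_mph: float) -> float:
    """Seconds until the follower reaches the spot the lead car occupies now."""
    return distance_ft / mph_to_fps(speed_mph)


for speed in (64, 66):
    gap = time_gap_seconds(83, speed)
    print(f"{speed} mph = {mph_to_fps(speed):.1f} ft/s, gap {gap:.2f} s")
```

At 64 to 66 miles/hr the car covers roughly 94 to 97 feet per second, so an 83-foot following distance is a gap of well under one second (about 0.86 to 0.88 s).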

At about 5.9 seconds before the crash, Tesla’s Autosteer initiated a “left steering input” that brought the Model X into the Gore Area. Again, what should have happened is that the driver irritatedly steers the car back into the lane and mumbles about the buggy Autopilot software. But no driver input was detected at the steering wheel.

At about 3.9 seconds before the crash, the Autopilot software detected that there was no car ahead. That’s because the car had left the US-101 HOV lane and was treating the Gore Area as a lane of traffic. Because it detected no car ahead, the car started accelerating toward the 75 miles/hr cruise speed.

The Forward Collision Warning system did not detect the concrete barrier, and no alert was given to the driver. Neither Autopilot nor the driver made any attempt to avoid the collision. Instead, the car was accelerating at the moment of impact, and the driver died.
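To get a rough sense of the distances involved in this timeline, here is a sketch that assumes a constant 65 miles/hr. This is my simplifying assumption, taken as the midpoint of the quoted 64 to 66 miles/hr range. The car actually accelerated during the final seconds, so these figures understate the real distance somewhat.

```python
# Rough distance covered from each timeline event to the point of impact.
# Assumption: constant 65 mph (midpoint of the quoted 64-66 mph range).
# The car really accelerated in its final ~3.9 s, so these are underestimates.
SPEED_MPH = 65.0
FPS = SPEED_MPH * 5280 / 3600  # about 95.3 feet per second

events = {
    "Autosteer steers left into the Gore Area": 5.9,
    "no lead car detected, car starts accelerating": 3.9,
}

for label, seconds_before_crash in events.items():
    distance_ft = FPS * seconds_before_crash
    print(f"{seconds_before_crash:.1f} s before impact ({label}): ~{distance_ft:.0f} ft")
```

Under this assumption, the car entered the Gore Area at least about 560 feet before the barrier, with nearly six seconds in which an attentive driver could have steered back.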

The NTSB report also notes there were two iPhones in the car. One, an iPhone 8s, was being actively used to play a game. Over the previous several days, the driver had been actively playing the game between 9AM and 10AM during his commute to work. In the 11 minutes before the crash, the phone recorded 204 kilobytes per minute of data transfer, consistent with online game play.

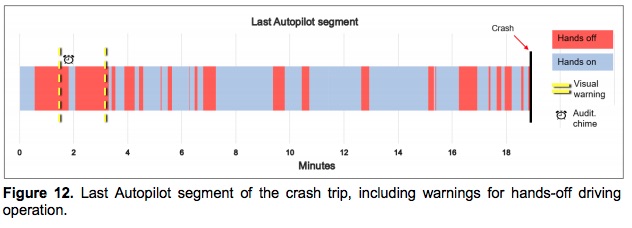

The NTSB report includes this log of hands-on versus hands-off driving in the preceding several minutes. In the last four minutes, his hands were off the steering wheel much of the time, enough that the car gave two visual warnings.

The report also notes that the driver had said the Autopilot system frequently made mistakes at that interchange by driving into the Gore Area. The NTSB says data collected from the car showed two similar events, on March 19, 2018 and February 27, 2018. In both cases the car steered left into the Gore Area, but within two seconds the driver steered it back into the HOV lane. In other words, this driver knew of the risk and knew how to handle it, but on the morning of the crash he was too busy playing his game.

Attending to Autopilot systems

The Tesla Autopilot system is not a Full Self Driving system. It is a driver assist feature, which drivers are required to monitor at all times. The Autopilot system is supposed to reduce driver fatigue by eliminating some of the mental burden of driving. But the driver is required to pay attention.

Autopilot uses hand pressure on the steering wheel to detect whether the driver is paying attention. The NTSB report says several times that this is not sufficient. As TubeTimeUS notes on Twitter, many Tesla owners are irritated by the warnings, calling them “nanny nags”, and they exchange notes on how to defeat the warnings so they can drive “hands off” for longer periods of time.

Existing requirements in Europe already spell out what an autopilot system must do to warn drivers who ignore their responsibility to monitor the car. Cars with this sort of feature are required to give the driver escalating warnings.
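The idea of escalating warnings can be sketched as a small monitor that accumulates hands-off time and maps it to a warning level. The thresholds and level names below are hypothetical placeholders I chose for illustration. They are not taken from the European rules or from Tesla’s implementation.

```python
from enum import Enum


class Alert(Enum):
    NONE = "no warning"
    VISUAL = "visual warning"
    AUDIBLE = "visual plus audible warning"
    HANDOVER = "slow the car and demand takeover"


# Hypothetical escalation thresholds, in seconds of continuous hands-off
# driving. Illustrative values only; not from any regulation or vendor.
# Checked from most to least severe.
THRESHOLDS = [
    (30.0, Alert.HANDOVER),
    (15.0, Alert.AUDIBLE),
    (10.0, Alert.VISUAL),
]


def alert_level(hands_off_seconds: float) -> Alert:
    """Map a continuous hands-off duration to an escalation level."""
    for limit, level in THRESHOLDS:
        if hands_off_seconds >= limit:
            return level
    return Alert.NONE


class HandsOffMonitor:
    """Accumulates hands-off time from periodic steering-wheel samples."""

    def __init__(self) -> None:
        self.hands_off_seconds = 0.0

    def update(self, hands_detected: bool, dt: float) -> Alert:
        # Any detected hand contact resets the timer; otherwise it grows by dt.
        if hands_detected:
            self.hands_off_seconds = 0.0
        else:
            self.hands_off_seconds += dt
        return alert_level(self.hands_off_seconds)


if __name__ == "__main__":
    monitor = HandsOffMonitor()
    # Simulate 20 one-second samples with no hands on the wheel.
    for _ in range(20):
        level = monitor.update(hands_detected=False, dt=1.0)
    print(level.name)  # AUDIBLE (20 s is past the 15 s threshold)
```

Note the weakness in a design like this one. Anything that fakes a steering input resets the timer, and that is exactly the kind of trick the “nanny nag” workarounds rely on. It also fits the NTSB’s conclusion that monitoring steering-wheel interaction is not an effective measure of driver engagement.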

Drivers who see this as a “nanny nag” are playing with their lives.

It would be like living through a pandemic in which wearing a mask limits the spread of the disease. Avoiding disease in such a case requires vigilance, but some might instead equate a requirement to wear a mask with fascism. In extreme cases, some might even hold protest rallies against the fascism of a mask-wearing requirement meant to mitigate the public health emergency.

Avoiding supervising a car with partial driving automation features is just as stupid as not wearing a mask during a pandemic.

The danger of passive vigilance

The NTSB report assigns the probable cause of this accident to the Autopilot system steering the Model X into the Gore Area, the driver failing to supervise the car, and the Tesla Autopilot failing to adequately detect driver inattentiveness. While that’s a fair assessment, the conclusion skips over a very important idea that the report mentions only in passing.

“Passive vigilance” is the result of being required to watch for a rare event and to respond within a couple of seconds. The task demands vigilance for days on end during which there is nothing to do, and then a quick response on the once-a-month occasion when the dangerous event does occur.

On Twitter, TubeTimeUS described it this way:

The “watch for a pink gorilla” scenario is a very good summary of driving a car with partial self driving capability.

Consider that the driver in question had experienced similar mistakes with his car in February and March before the fatal accident. This means his car made that mistake about once a month. On every other day of each month, the car presumably drove through that interchange without a problem, and he arrived at his office having played video games all the way to work without incident.
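A quick calculation shows why a once-a-month failure can be so lulling. The numbers here are my own illustrative assumptions, not NTSB figures: about 20 commutes a month, one pass through the interchange per commute, and one Autopilot mistake per month, which works out to roughly a 5% chance per trip. The sketch also treats trips as independent. It computes how likely a driver is to see a run of uneventful trips.

```python
# Illustrative assumptions (not NTSB data): ~20 commutes per month,
# ~1 Autopilot mistake at this interchange per month, independent trips.
TRIPS_PER_MONTH = 20
MISTAKES_PER_MONTH = 1

p_mistake = MISTAKES_PER_MONTH / TRIPS_PER_MONTH  # 0.05 per trip


def p_clean_streak(trips: int, p: float = p_mistake) -> float:
    """Probability of `trips` consecutive trips with no mistake."""
    return (1 - p) ** trips


for n in (5, 10, 20):
    print(f"{n:2d} clean trips in a row: {p_clean_streak(n):.0%}")
```

Under these assumptions, a driver goes a week without incident about 77% of the time, two weeks about 60% of the time, and a full month about 36% of the time. Long stretches like these are exactly what teach a driver to stop watching.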

This sounds like a recipe for lulling oneself into a false sense of security.

Driving a car with no driver-assist features is understandably mentally fatiguing. In heavy traffic, you’re constantly negotiating space with the drivers around you, with a constant risk of collision. Tesla claims that Autopilot takes on some of that burden and therefore relieves the driver of some of that mental fatigue.

But once you’re driving a vehicle that takes over part of the driving chore, it’s easy to see how you’ve gotten yourself onto a slippery slope. The more you trust the car, the more you let it do, and apparently the less attention you pay.

Your last second of life may look like this:

This image shows a test driver in Uber’s self-driving vehicle development program. In 2018, this Uber driver was letting the car drive around Phoenix, AZ at night, when the car struck and killed a pedestrian who’d stupidly jaywalked across the road. The driver was supposed to be monitoring the car’s behavior and recording data into some instruments, but was instead streaming a television show on Hulu.

David, I feel like this article, like all articles on the subject, need to start with the fundamental premise: do you want humans driving, or do you want autonomous driving, or do you want a blend? Every speck of evidence is that, above all, you don’t want humans driving. Tens of thousands die and hundreds of thousands are maimed in the U.S. every year due to the human error. I believe full near-safe autonomous driving is coming remarkably quickly, and will be here soon. Until then, a blend of human and autonomous will have to do. But what you really, really don’t want is humans driving. An imperfect autonomous system is far, far better than the horrible human driving we’ve seen throughout history.

Here’s an angle: based purely on miles driven (though I don’t know that it is necessarily going to be a pure comparison, as autonomous rarely handles all driving), what is the rate of accidents, and the rate of avoided accidents, on a per miles basis, in a comparison between humans, blended, and full autonomous?
