Clearly folks are eager to have self-driving cars TODAY, if not sooner. I’m seeing constant chatter online from folks excited about the prospect of letting their car take over the driving chores. A few weeks ago, a new Tesla Model 3 owner, giving a presentation about his newly purchased car at my local Electric Auto Association meeting, expressed an interesting thought: self-driving cars can make it possible for older folks to keep driving even as their ability to see and react to traffic diminishes. The news is full of stories about this or that autonomous vehicle test, and about which cities are supporting that research and which aren’t. A few years ago Apple was supposedly developing such a car, but seems to have backed off from that plan. Other companies, like Faraday Future, Lucid Motors, and SF Motors, are springing up and promoting a vision of fully autonomous driving.

I think this is a mad rush to adopt a technology that isn’t quite ready. Two events over the last two weeks give enough evidence to demonstrate my concern.

Before getting angry at what I’m about to say – read it carefully. Please consider that I’m not negative on the idea of autonomous vehicles. There are many potentially huge benefits to autonomous driving. I’m concerned about rushing headlong into something before it’s fully baked.

In one case an autonomous test car operated by Uber struck and killed a pedestrian, while the occupant was distractedly fiddling with his phone or something. A critical screen capture is shown above, and a dashcam video is shown below. In the other event, a Tesla Model X driver on US 101 in Mountain View CA died after his car drove directly into a concrete lane divider. It seems the car got confused by a left-hand exit lane that splits the left-hand lane in two; the car sought to split the difference and ended up driving directly into the divider.

Autonomous Uber pedestrian fatality

The first fatal accident involving an autonomously driven car occurred in Tempe Arizona in mid-March 2018. It was fairly late in the evening, on a Sunday night, when a pedestrian pushing a bicycle “suddenly” walked onto the roadway. According to an early news report, the driver told Police the pedestrian walked out in front of the car, and that “His first alert to the collision was the sound of the collision.” The Police went on to suggest the darkness and shadows along the road would have been a challenge even for an alert human.

That’s well and good, except that the dashcam video (see below) makes it clear the driver wasn’t paying any attention to the road. His attention was on something near waist level, probably a cellphone, with occasional glances at the road, until it was too late. This is not an attentive, responsible driver.

Compounding the problem is that the pedestrian did not act responsibly. She should have looked at the road before trying to cross, and should have gone the extra 100 yards to reach the crosswalk. In the video the pedestrian seems distracted with pushing the bicycle, whose handlebars were holding grocery bags.

The driver had probably become lulled into a false sense of security – that this autonomous driving system would take care of things, so that the driver could abdicate his responsibility for safe vehicle operation.

Another report quotes forensic experts saying that a human driver could have avoided the accident, and that sensors on the car should have detected the pedestrian. According to Bryant Walker Smith, a University of South Carolina law professor, the incident “strongly suggests a failure by Uber’s automated driving system and a lack of due care by Uber’s driver (as well as by the victim).” Mike Ramsey, a Gartner analyst, said “Uber has to explain what happened. There’s only two possibilities: the sensors failed to detect her, or the decision-making software decided that this was not something to stop for.”

Over the years I’ve been in a large number of traffic accidents, both as a passenger and as a driver. The key takeaway I have from those experiences, including nearly dying twice, is that in every accident someone or multiple someones weren’t paying attention, and had abdicated their responsibility to safety on the road.

Bottom line – the software systems failed, the driver failed, and the pedestrian failed. All failed to be responsible for road safety.

Tesla Model X driving into a lane divider

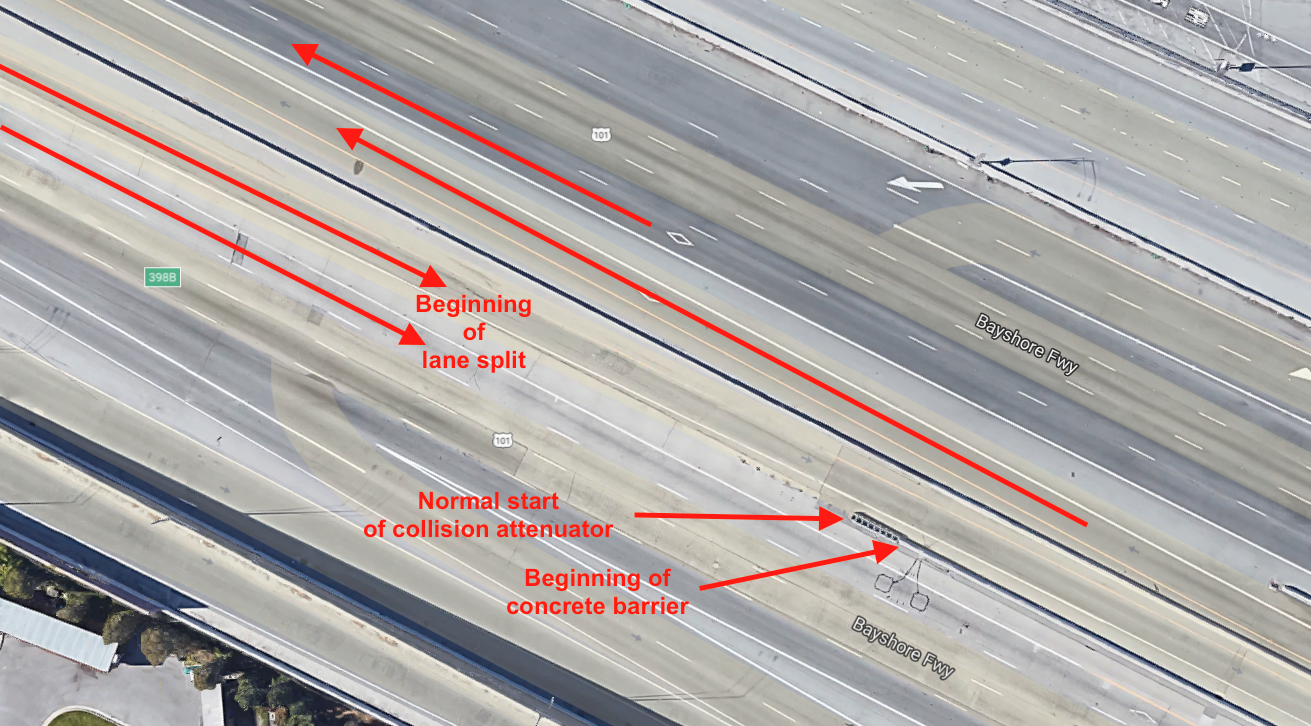

On Friday March 23, a Tesla Model X drove straight into a concrete lane divider at a spot where there’s a left-hand exit ramp from US 101 taking drivers from the US 101 HOV lane into the HOV lane on CA Hwy 85. It’s a somewhat confusing junction even in the best of conditions.

Tesla Model X crash in Mountain View, drove directly into the concrete barrier shown here. The energy absorption system normally at this junction was missing. Source: NBC BayArea

A local news report has video taken by a “Good Samaritan” who was on the scene. He and another man dragged the car driver to safety before the Model X burst into flames. It’s likely the battery pack caught fire – a not unexpected result because the entire front of the car was torn away, and obviously the battery pack was damaged. Police posted the following image showing the normal condition of that exit ramp:

That metal section with the orange warning symbol is meant to absorb any impact at that point, lessening the chance of injury. But it’s reported the crash attenuator, an energy absorbing system, was missing, meaning the car hit the concrete directly.

Annotated satellite view of the collision location. It’s difficult to show with this map, but the lane division is not the best design. Source: Google Maps satellite view

The severity of the crash is partly because the collision attenuator was missing. Tesla stresses this point in its two blog posts concerning the fatal collision (post 1, post 2). The company also stresses the safety record – that “In the US, there is one automotive fatality every 86 million miles across all vehicles from all manufacturers” and that for Tesla’s cars “there is one fatality, including known pedestrian fatalities, every 320 million miles in vehicles equipped with Autopilot hardware”. This results in a claim that “driving a Tesla equipped with Autopilot hardware, you are 3.7 times less likely to be involved in a fatal accident.”

Tesla has posted similar statistics in the past – the number of gasoline car fires per year is over 250,000 in the USA, while there are only a scant few electric car fires per year. The facts seem to be on Tesla’s side, that on average you’ll be safer in a Tesla car with Autopilot engaged than you would otherwise. For this particular stretch of highway, Tesla says its cars have driven here 85,000 times with Autopilot engaged, with zero accidents.
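For anyone who wants to check the arithmetic behind the “3.7 times” claim, here is a minimal sketch. It takes Tesla’s two quoted figures at face value (I have not verified them independently), and the variable and function names are my own, purely for illustration.

```python
# Figures as quoted from Tesla's blog post; taken at face value, not verified.
US_MILES_PER_FATALITY = 86_000_000      # all vehicles, all manufacturers
TESLA_MILES_PER_FATALITY = 320_000_000  # vehicles equipped with Autopilot hardware


def fatalities_per_100m_miles(miles_per_fatality: int) -> float:
    """Convert 'one fatality every N miles' into fatalities per 100 million miles."""
    return 100_000_000 / miles_per_fatality


# How many times farther a Tesla travels, on average, per fatality.
ratio = TESLA_MILES_PER_FATALITY / US_MILES_PER_FATALITY

print(f"US average: {fatalities_per_100m_miles(US_MILES_PER_FATALITY):.2f} per 100M miles")
print(f"Tesla:      {fatalities_per_100m_miles(TESLA_MILES_PER_FATALITY):.2f} per 100M miles")
print(f"Ratio:      {ratio:.1f}x")
```

The ratio comes out to about 3.7, matching the quoted claim. Note that Tesla’s own wording covers vehicles “equipped with Autopilot hardware”, which is not the same as miles driven with Autopilot actually engaged.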

By the way – the fact that Tesla has that piece of data says that Tesla is recording data about where Tesla cars are being driven. Keep that thought bookmarked in your mind because it is a Big Brother consideration about personal privacy in the age of internet-connected vehicles.

Consider those statements, and then watch the following video posted by someone in Indiana showing a recreation of the conditions of this accident.

The title of the video is overly alarming – I have no control over that. It shows someone driving at night on a highway with a similar left-hand exit. Watch the road signs and you can clearly see they’re driving in Indiana. There is a change in the lane markers prior to a left-hand exit lane, and then suddenly the car is driving directly into the gore point. The driver is clearly doing his best to stop the car and avoid hitting the barrier, and he manages to do so with a foot or so to spare.

Tesla Model S nearly drives directly into gore point of an exit lane. Source: cppdan on YouTube

Clearly the conditions can be replicated. This means Tesla should be able to improve the algorithms to better handle this scenario.

According to an ABC7 News report, the car’s owner told his family that 7 out of 10 times the car would try to veer into the barrier. He had taken it into the dealership (and has the invoice to prove so) but Tesla Service was unable to replicate the behavior. A KQED news report has an excellent summary.

According to Tesla’s second update the driver had engaged Autopilot “with the adaptive cruise control follow-distance set to minimum” moments before the collision. The driver had received several warnings earlier in the drive, and his hands were not detected on the steering wheel in the seconds before the collision. The NTSB issued a stern warning to Tesla following this posting, because Tesla was releasing data from an accident investigation before the investigation was complete.

If this is accurate, it raises a question – if the driver was worried about driving through that location, why did he enable Autopilot? And why weren’t his hands on the steering wheel?

It seems to me that Tesla’s posting is designed to raise those questions in our minds, to allay worries, and to shift blame onto the driver.

Bottom line is that we’ve seen a reenactment of the scenario, and it seems apparent that Tesla’s Autopilot has a potential failure mode in that condition.

Bottom line is that Tesla has some answering to do regarding whether it did the right thing with this fellow’s concerns.

Bottom line is that maybe the driver engaged in some risky behavior for some unknown reason. If Tesla’s data is accurate, he abdicated his responsibility for safe driving at the last minute.

Bottom line is that this fellow’s family is now left without a father.

That’s four bottom lines, but it’s a complex story.

Urging for Caution

The title of this post evokes the title of a Computer Science paper from the 1960s: GO TO Statement Considered Harmful. The author of that paper, Dr. Dijkstra, wrote of how the GOTO statement, while useful, led programmers to create spaghetti code that was buggier than necessary.

In other words the GOTO statement lulled programmers into writing problematic code.

Similarly, current driver assist features may be lulling drivers into abdicating their responsibility for safety on the road.

There is no vehicle on the market today certified for full autonomous driving. Instead most (or all) of the manufacturers offer different sorts of driver assist features that are taking over more driving tasks every year, and most or all of them promise even more features or full autonomy within a couple of years.

For its part Tesla is very careful to use the word Autopilot, and to not make any claim of full autonomous capabilities. The current Autopilot software gives warnings if it detects that the driver does not have their hands on the steering wheel, or is otherwise doing risky things.

But, it seems some Tesla drivers have been lulled into a false sense of security, that the car will take care of the driving and they can do other things. I say this having read online comments from lots of Tesla drivers.

Must we rush headlong into adopting technology which seems not quite ready? Why not slow things down a little bit, and let the engineering teams work on refining the technology?


Very good article. I don’t think you note it, but the striping seen on the still image of the roadway at the accident site seems to differ from the striping on the video image of the roadway (although jeez, the videographer / Facebook poster lowers the camera for the final critical seconds). But ultimately, as with Uber, it is clear that full autonomy is not ready for prime time.

And query its usefulness for a host of difficult situations. First, based upon its expected critical function of picking people up and dropping people off, I do not see how it will have the judgment to know when and how to pull over; reading the conditions in such a situation is very important. Second, whether urban or otherwise, there are always a host of difficult people to get to try to use the autonomous car with good judgment.

As for the Uber accident, that was just terrible. Heck, as Volvo points out, the car’s built-in accident avoidance should have prevented this. Which suggests Uber’s system sucks.

But lastly, remember the metric. It is not perfection. If it is merely reducing by even one death the total number of deaths from cars — about 36,000 annually — it will have been worth it.