You might have heard that artificial intelligence, a.k.a. machine learning, is a big thing in the tech industry. Look for a computer programming job and it seems all the openings are either in machine learning or blockchain (which is a story for another day). Anyone interested in the future of cars knows the car companies are working in overdrive to develop autonomous vehicle software and sensors. You might have even heard that self-driving cars rely on artificial intelligence software. What you might not know is that there is a $250 kit with which one can learn about developing machine learning algorithms, and even learn the basics of how machine learning software is used in autonomous vehicles.

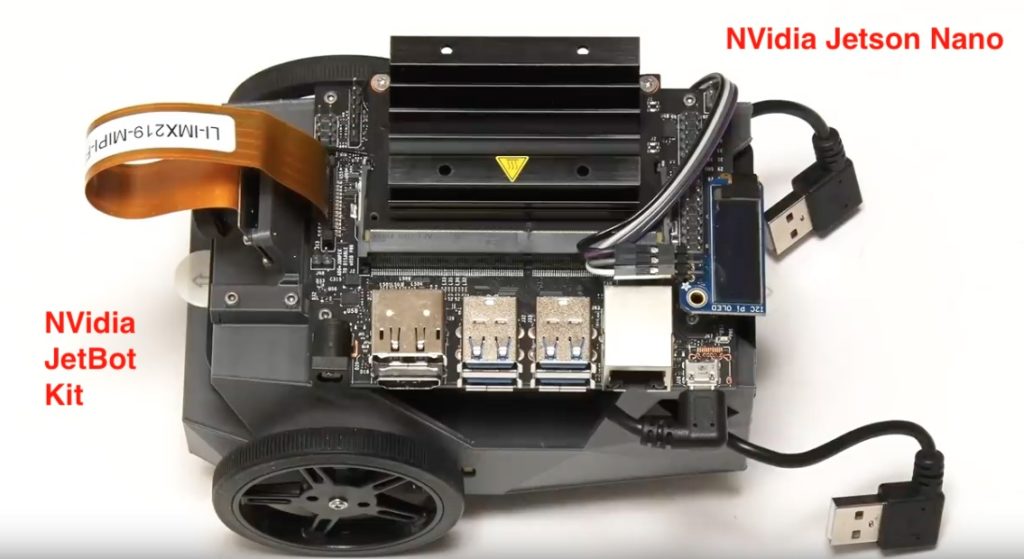

What’s shown above is a kit built around an NVidia Jetson Nano single-board computer. It is housed in a carrier containing a couple of electric motors, a motor controller and a battery pack, which together form a computer-controlled autonomous vehicle. This kit is called the NVidia JetBot.

Overview of the NVidia JetBot kit

The Jetson Nano is a “single board computer”, a term popularized by the Raspberry Pi. These computers have enough power to run a full Linux desktop experience on a small, inexpensive board that also has low-level input/output capabilities for interfacing with real hardware. The Jetson Nano includes a high-powered GPU that can be used for software requiring high performance computing, like machine vision applications. NVidia is one of the leading makers of graphics boards, and NVidia’s GPUs are used not just for graphics but also for high performance mathematical computing. Most machine learning packages can use GPUs for a huge performance boost. The NVidia Jetson Nano is designed for researchers building AI powered robots and similar hardware projects that use AI algorithms.

The JetBot kit is an ingenious combination of low-cost components that together make the basics of an autonomous electric vehicle. There are two electric motors powering a pair of drive wheels, which are controlled by a motor control board commonly used by the DIY “Maker” community. A third wheel is implemented for stability in the form of a roller ball.

A normal 10,000 mAh USB power bank is used as the on-board battery pack. It has enough power delivery capability to supply both the Jetson Nano and the drive motors, and gives a fairly decent run time.

A camera mounted to the front serves as a sensor with which the JetBot can sense the world. This camera is directly interfaced to the Jetson Nano.

Using the JetBot kit

Since the NVidia Jetson Nano is a Linux single board computer, you can connect it to a normal monitor, keyboard and mouse, and use this as a desktop computer. You’ll need to do this for at least a few minutes in order to configure the software and some settings on the board.

Under normal usage you’re expected to connect to a web server running on the board. A small information panel on the rear gives the IP address for the web server, and once you’ve connected you can view a Jupyter Notebook based control system.

Jupyter Notebook is a standard machine learning development environment widely used by artificial intelligence researchers. NVidia has developed some Notebook environments with which to learn how to drive the JetBot using artificial intelligence algorithms.

One notebook contains a prebaked “collision avoidance” artificial intelligence model. By running that model, the JetBot will drive itself around the floor, and will avoid running into objects it sees via the camera.

Another notebook allows you to train the collision avoidance algorithm with your own images. In the video attached below the presenter trained the JetBot so it can drive around a table top without falling off the edge of the table.

Artificial Intelligence basics

The attached video manages to show some basics of artificial intelligence and of training AI models. The presenter did not set out to show us how to develop a self driving car, but in effect that’s what we’re seeing. One key aspect of any self-driving car is to detect, using a variety of sensors, any object the car might collide with, and to avoid the collision. While the JetBot does not have enough sensors or algorithms for full autonomy, it gives us a tiny peek behind the curtain to see how this works.

Modern machine learning practice is to create what’s called an “AI Model” that makes statistical guesses (er… predictions) based on input. The process of creating it is called “training a model”.

An AI model is trained by collecting data, in this case images captured through the camera. The goal of this experiment is for the JetBot to drive around a table top without falling off. So the presenter collected over 190 images of the table top from different angles, marking each image according to whether it shows the JetBot as blocked or not.

The images collected this way are called “training data”. Each image is categorized, by pressing either the green or red button, as representing the “blocked” or “not blocked” condition.
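To make the idea of labeled training data concrete, here is a minimal Python sketch. It is not the JetBot notebook code: the `TrainingSet` class, the label strings and the file names are all made up for illustration. It only shows the bookkeeping, where each captured frame is stored together with the category a person assigned to it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LabeledImage:
    filename: str  # hypothetical name of a saved camera frame
    label: str     # the category a person assigned to that frame


class TrainingSet:
    """Illustrative container for labeled frames (not the JetBot's own code)."""

    LABELS = ("blocked", "not_blocked")

    def __init__(self):
        self.samples = []

    def add(self, filename, label):
        # Reject anything that is not one of the two known categories.
        if label not in self.LABELS:
            raise ValueError(f"unknown label: {label}")
        self.samples.append(LabeledImage(filename, label))

    def counts(self):
        # How many frames were collected for each category.
        return Counter(sample.label for sample in self.samples)


dataset = TrainingSet()
dataset.add("frame_001.jpg", "not_blocked")  # open table top ahead
dataset.add("frame_002.jpg", "blocked")      # table edge ahead
dataset.add("frame_003.jpg", "not_blocked")
print(dataset.counts())
```

Checking the per-category counts is useful in practice, since a training set with very few examples of one category tends to produce a model that rarely predicts it.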

With the training data collected, the software on the JetBot then trained a new collision avoidance AI model. With that new model the JetBot should be able to drive around that table top without falling off. If it were put on a different table top, the JetBot wouldn’t know what to do, since the images collected for this model would not match what it sees on that other table top.
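What “training” means can be sketched with a far simpler model than the one the JetBot uses. The Python below is an illustration only: it fits a logistic regression, not a deep neural network, and it assumes each image has already been reduced to two invented numbers (how much of the frame shows the table edge, and how dark the frame is). The feature values and the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, learning_rate=0.5):
    """Fit a logistic regression with plain stochastic gradient descent."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            predicted = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = predicted - y  # positive when the guess is too high
            # Nudge each weight against the error, scaled by its input.
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


def predict_blocked(weights, bias, x):
    """Return the model's estimated probability that the robot is blocked."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Invented features per image: [edge_fraction, darkness]; label 1 = blocked.
samples = [[0.9, 0.8], [0.1, 0.2], [0.8, 0.7], [0.2, 0.1], [0.7, 0.9], [0.3, 0.2]]
labels = [1, 0, 1, 0, 1, 0]

weights, bias = train(samples, labels)
print(predict_blocked(weights, bias, [0.85, 0.75]))  # resembles the blocked examples
print(predict_blocked(weights, bias, [0.15, 0.15]))  # resembles the not-blocked examples
```

The same limitation described above applies to this toy version: the model only learns the pattern present in its examples, so inputs unlike anything in the training data get unreliable predictions.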

What’s shown here is a simplified diagram of what’s known as a neural network. Neural networks are a mathematical emulation of, well, neurons. Neurons are of course the basic building block of brains and the sensory systems of animals.

What artificial intelligence researchers do is build artificial neural networks — these ARE the AI Model mentioned earlier.

A neural network takes in a set of numerical inputs at one end. The data is run through a series of calculations dictated by the trained model, and an answer comes out the other end. In this case images captured through the camera are converted into the numbers applied to the input of the model. The trained neural network then recognizes features in the image that result in a prediction as to whether the JetBot is “blocked” or “not blocked”. Based on that prediction the JetBot will either continue driving or change directions.
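The flow just described, from pixels to numbers to a prediction to a driving decision, can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the network has only four inputs and two hidden units, its weights are hand-picked instead of trained, and the action names are hypothetical. A real collision avoidance model is vastly larger, but the calculation has the same shape.

```python
import math

# Hand-picked, hypothetical weights. In a real model these come from training.
HIDDEN_WEIGHTS = [
    [1.0, 1.0, -1.0, -1.0],
    [-1.0, -1.0, 1.0, 1.0],
]
HIDDEN_BIASES = [0.0, 0.0]
OUTPUT_WEIGHTS = [4.0, -4.0]
OUTPUT_BIAS = 0.0


def normalize(pixels):
    """Convert raw 0-255 brightness values into numbers between 0 and 1."""
    return [p / 255.0 for p in pixels]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def probability_blocked(pixels):
    """Run one forward pass: inputs -> hidden layer -> single output."""
    x = normalize(pixels)
    hidden = [
        relu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(HIDDEN_WEIGHTS, HIDDEN_BIASES)
    ]
    z = sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS
    return sigmoid(z)


def choose_action(pixels, threshold=0.5):
    """Turn when the model thinks the way is blocked, else keep driving."""
    return "turn" if probability_blocked(pixels) >= threshold else "forward"


print(choose_action([230, 204, 26, 51]))
print(choose_action([26, 51, 230, 204]))
```

The threshold of 0.5 is a design choice for this sketch; raising it makes the robot slower to turn away, and lowering it makes the robot more cautious.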

Conclusion

There’s a 10 year old me from many years ago who would love to have had such a thing. We are starting to live in the future that was promised.

The Jetson Nano is an example: for a very small sum of money, one can buy a high powered computing device that runs powerful AI software with reasonable performance. And with a few dollars more in parts, the device can roll around and drive itself using AI algorithms. What an opportunity our kids have today!

What makes this worthy of LongTailPipe is that this inexpensive kit demonstrates enough about autonomous driving to give us a better appreciation for the work being performed by the car manufacturers.

Actual self driving cars have many more sensors and even more powerful computers than what we have with the JetBot. The AI models being developed are far more comprehensive, since each car manufacturer is driving its cars through thousands of real-world situations, collecting data all the way.

In case it’s not clear – the images in this post come from the attached video.
